On the post in which I resorted to flowcharts to try to unpack people’s claims about the process involved in building scientific knowledge, Torbjörn Larsson raised a number of concerns:

The first problem I have is with “belief”. I have seen, and forgotten, that it is used in two senses in English – for trust, and for conviction. Rather like for theory, the weaker term isn’t appropriate here. I would say that theories give us trust in the repeatability of predicted observations, and that kind of trust counts as knowledge. In fact, the trust that repeated observations give already counts as knowledge.

The second problem I have is with “the problem of induction”. Science has a set of procedures that observably generates robust knowledge, and the alleged problem is seldom seen. When the terrain and the map don’t agree, junk the map.

The third problem I have is with the specific diagrams. Real scientific knowledge production will not yield to any one diagram. So for the philosopher who raises a hypothetical “problem of induction”, we could turn the question around and ask why the obvious “problem of description” (which, ironically, is a real problem of induction 🙂) isn’t bothersome. The scientist’s answer would probably be as above: “eppur si muove”.

… Although I don’t feel that testability is the end-all of science, the diagram is slanted away from testing towards a weaker and, in the end, nonfunctional descriptive science. Whether we call tested knowledge “a conclusion” or “a tentative conclusion” is irrelevant IMHO; it is a conclusion we will (have to) trust in.

The fourth (oy!) problem I have is with the conflated description the diagram alludes to. In the text there is a distinction between individual scientists and the scientific enterprise. Different entities will obviously use different approaches to knowledge, and even if the individual doesn’t need to trust her findings, the enterprise relies on such trust.

These are reasonable concerns, so let me say a few words to address them.

I’ll start with the fourth concern, the relation between what’s going on with the individual scientist and the larger community of scientists working together to build knowledge. While some thinkers have framed the problem of building objective knowledge as one that depends on each individual scientist being highly objective and switching off his or her own biases, others (including Frederick Grinnell and Helen E. Longino) have put the burden of objectivity primarily on the community: bias is stripped out of what ends up being identified as scientific knowledge when the community “checks the work” of individual scientists within it.

Myself, I’m inclined to think that the community has an easier time being more objective when each of the individuals within that community is doing his or her best to be aware of, and unmoved by, his or her own biases. This may be psychologically challenging, but it’s not impossible. Certainly, the process of trying to persuade other scientists that you’ve found something interesting puts you in touch with the idea that others in your community may not share your hunches.

So, it might be advisable to have different flowcharts for what the individual scientist is doing and what the scientific community is doing. But given that each individual scientist is (or might be) striving to be as hardheadedly objective as the community of individual scientists working in concert, we might be able to get away with using the community-level process as an idealized model of the individual-level process.

Torbjörn Larsson’s third concern is also related to the worry that a lot is being idealized in these charts — that the actual process of building scientific knowledge “on the ground” is messy and can’t be properly captured in a single road map. I agree. It’s best to think of the flowcharts as trying to capture the process of justifying scientific claims, not the process by which you come up with them or get the experiment to work or what have you.

And justification is the issue at the heart of the problem of induction, which seems to be the sticking point in Torbjörn Larsson’s second and first concerns. What is it that makes a claim count as scientific knowledge? There is an operational kind of answer to this question: here are the steps you need to take to support your claim in order for scientists to regard it as playing a particular role in the scientific discourse (whether you want to identify the claim thus supported as “credible” or “convincing” or “the best available explanation of the phenomena” or something else).

But there’s also a bare-knuckles logical warrant sort of answer to this question, and this is where the problem of induction comes in. The problem of induction is a worry if you think knowledge ought to come down to claims about which you need entertain no doubts. If you want your claim to be unsinkable before you call it knowledge, then you can’t laugh the problem of induction off as a mere philosophical trifle.

The observations we’ve gathered so far don’t provide empirical evidence about the observations we haven’t yet made. As regular as the phenomena in the universe seem to be, we’ve only observed a fraction of all the things we could observe, and no one set of inferences we could draw from the data now in evidence is the only set of inferences that fit these data.
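As a toy illustration of that last point (the two rules and the data below are hypothetical, not taken from the post), here are two Python rules that fit every observation made so far equally well, yet disagree about the very next case:

```python
# Toy illustration of underdetermination: two hypothetical rules that agree
# on every observation gathered so far but disagree about unobserved cases.

# Hypothetical data: we have only looked at inputs 0..9, and each output equalled its input.
observations = [(n, n) for n in range(10)]


def hypothesis_a(n: int) -> int:
    """The 'obvious' generalization: output always equals input."""
    return n


def hypothesis_b(n: int) -> int:
    """A gerrymandered rule: identical to hypothesis_a below 10, different afterwards."""
    return n if n < 10 else n + 1


def fits(hypothesis, data) -> bool:
    """True if the hypothesis reproduces every (input, output) pair in the data."""
    return all(hypothesis(x) == y for x, y in data)


print(fits(hypothesis_a, observations), fits(hypothesis_b, observations))  # True True
print(hypothesis_a(10), hypothesis_b(10))  # 10 11
```

Nothing in the ten observations favours one rule over the other; only a new observation, or an extra assumption such as a preference for simpler rules, can separate them.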

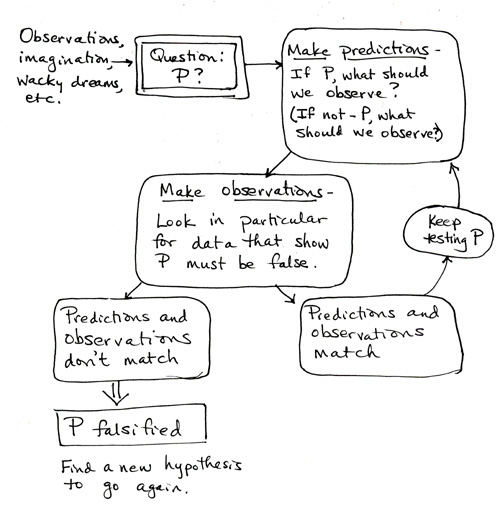

Sir Karl Popper didn’t see the problem of induction — that inductive inferences drawn from limited data could go wrong — as something that could be “solved”. However, he thought that the methodology of science avoided the problem by not identifying conclusions arrived at through inductive inference as “knowledge” in the strong sense of “there is no way this could fail to be true”. Here’s Popper’s picture of the process of building scientific knowledge:

Notice that Popper doesn’t think it matters all that much where your hypothesis P comes from. Maybe it comes from lots of poking around and observing your phenomena. Maybe it comes from that recurring nightmare of the snake biting his own tail. It’s not important. The thing that can make P a respectable scientific claim is that it is tested in the right kind of way.

How it is tested, for Popper, comes down to working out the observable consequences that would follow if P were true and especially the things we should not be able to observe if P is false. With these predictions in hand, you make your observations. If your observations don’t match with your predictions from P, they let you deduce that P cannot be true, and you achieve as much certainty as you can hope for. Since your conclusion that not-P is the conclusion of a deductive argument, you can bet the farm on it.

If, on the other hand, the observations match your predictions from P, Popper says that you haven’t established P with certainty (since you come to P at the conclusion of an inductive argument, and new evidence might undermine that conclusion). So, you go through the whole process again. You can’t, as far as Popper is concerned, conclude on the basis of all manner of successful observations (and an utter lack of observations that contradict P) that P is true — just that it has (so far) survived all attempts at falsifying it.
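The asymmetry Popper relies on can be sketched in a few lines of Python. This is a minimal sketch under simplifying assumptions: a hypothesis is represented by a hypothetical `forbids` function naming the observations it rules out, and observations are treated as error-free, which real measurements never are.

```python
from typing import Callable, Iterable


def popper_test(forbids: Callable[[str], bool], observations: Iterable[str]) -> str:
    """Test hypothesis P against observations, Popper-style.

    `forbids(obs)` returns True when P says `obs` should not occur.
    """
    for obs in observations:
        if forbids(obs):
            # Deductive step (modus tollens): P implies obs cannot occur;
            # obs occurred; therefore not-P.
            return "falsified"
    # Passing every test so far does not prove P; it only means P survives.
    return "not falsified (so far)"


# Hypothetical P: "all swans are white", which forbids seeing a non-white swan.
def all_swans_white_forbids(swan_colour: str) -> bool:
    return swan_colour != "white"


print(popper_test(all_swans_white_forbids, ["white"] * 1000))             # not falsified (so far)
print(popper_test(all_swans_white_forbids, ["white"] * 999 + ["black"]))  # falsified
```

A thousand white swans leave P merely unrefuted, while a single black swan refutes it; that is the asymmetry between the inductive and the deductive steps in the chart.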

Does taking Popper seriously mean that scientists can’t ever draw positive conclusions? I don’t think so. The fact that scientists acknowledge that their conclusions are tentative and could be updated in the face of future data strikes me as an acknowledgment that they recognize that inductive inference doesn’t come with a guarantee. This recognition doesn’t mean you’re not allowed to use inductive inference, but rather that you have to be at least a little cautious about the weight you place on the conclusions drawn with it.

To the extent that using induction has generated pictures of the world that hold up to scientific scrutiny, inductive inference is a useful tool. Success to date is not, of course, a guarantee that inductive inference will always work, any more than the fact that the phenomena in our world seem reassuringly regular is a guarantee that they will remain so.

“Conviction” for a scientist, then, is not: “From this day forward, I am committed to P and nothing you could show me will ever shake my commitment to P.” Instead, we have something like: “Given the data amassed, and the stringent tests which P has passed, and the current lack of other claims that fit the phenomena as well and have held up as well to our testing, I’m committed to P. I’d be surprised if the situation were to change, but it could, in which case, I may update my view.”

For a belief to become a scientific conviction seems to require certain kinds of justification (from empirical data, theories, etc.). A belief without that kind of justification behind it is just a belief — nothing wrong with that, but it has no special status in scientific discussions. The problem of induction is concerned with what we can prove. It’s a matter of logic. To the extent that scientists find it fruitful to draw inductive inferences, they can, so long as they recognize (as they generally do) that the careful justifications that they offer don’t quite meet the level of deductive proof. Still, they are good justifications, and a claim backed by these will be on better scientific footing than a claim without such justifications.

This point is somewhat tangential to the main thrust of this post, but doesn’t the objectivity of the overall scientific enterprise often benefit from slight individual irrationalities? After all, if we really want to properly assess competing scientific programs (like string theory and loop quantum gravity and the like), it’s good to have some people who really believe one and some people who really believe the other, even though the data and theory aren’t yet well enough developed to justify the group in believing one or the other.

Of course, a possible response here is just to say that the scientist doesn’t need to actually believe her theory in order to work with it in the way that the community needs. Which might actually be a reasonable position, though it does sound strange when we run into creationist paleontologists.

How do we add flowcharts to comments?

In practice, somewhere in the “keep testing P” strand, you should add an “increase confidence in P” box.

Also, with regard to the hand-drawn flowchart issue, does there exist flowchart software that is cheaper and easier to use than a #2 pencil?

(two cents: hand-drawn is more appealing and elegant. avoid software.)

Kenny, I think that’s true. Of course, that’s the advantage of speaking of a community of inquirers rather than focusing on the epistemology of an individual. Science certainly lends itself well to a community effort, but not necessarily to an individual effort; this is something Janet touched on in passing. Of course, Popper in all this is echoing C. S. Peirce, who was a fallibilist in the days of Newtonian determinism, when it was much harder to be a fallibilist of that sort. However, Popper’s fallibilism seems (to me) to be much more extreme than Peirce’s, although his methodological criticism is quite interesting (and much more defensible than most naive critiques of Popper suggest).

Getting back to your point, C. S. Peirce would actually say that when a scientist is ideally doing science he has no beliefs since beliefs imply care and concern about the results – something an ideal scientist ought not have.

I think that the following bears on our discussion of belief in the context of peer reviewed scientific publication.

Is Most Published Research Really False?

http://www.sciencedaily.com/releases/2007/02/070227105745.htm

Source: Public Library of Science

Date: February 27, 2007

Science Daily — In 2005, PLoS Medicine published an essay by John Ioannidis, called “Why most published research findings are false,” that has been downloaded over 100,000 times and that was called “an instant cult classic” in a Boston Globe op-ed of July 27 2006. This week, PLoS Medicine revisits the essay, publishing two articles by researchers that move the debate in two new directions.

In his 2005 essay, Dr Ioannidis wrote: “Published research findings are sometimes refuted by subsequent evidence, with ensuing confusion and disappointment.” He argued that there is increasing concern that in modern research, false findings may be the majority or even the vast majority of published research claims, and went on to try and prove that most claimed research findings are false.

However, in this week’s PLoS Medicine, Ramal Moonesinghe (US Centers for Disease Control and Prevention) and colleagues demonstrate that the likelihood of a published research result being true increases when that finding has been repeatedly replicated in multiple studies.

“As part of the scientific enterprise,” say the authors, “we know that replication–the performance of another study statistically confirming the same hypothesis–is the cornerstone of science and replication of findings is very important before any causal inference can be drawn.” While the importance of replication was acknowledged by Dr Ioannidis, say Dr Moonesinghe and colleagues, he did not show that the likelihood of a statistically significant research finding being true increases when that finding has been replicated in many studies.

The authors say that their new demonstration “should be encouraging news to researchers in their never-ending pursuit of scientific hypothesis generation and testing.” Nevertheless, they acknowledge that “more methodologic work is needed to assess and interpret cumulative evidence of research findings and their biological plausibility,” particularly in the exploding field of genetic associations.

In the second article, Benjamin Djulbegovic (University of South Florida, USA) and Iztok Hozo (Indiana University Northwest, USA) say that Dr Ioannidis “did not indicate when, if at all, potentially false research results may be considered as acceptable to society.” In their article, they calculate the probability above which research findings may become acceptable.

Djulbegovic and Hozo’s new model indicates that the probability above which research results should be accepted depends on the expected payback from the research (the benefits) and the inadvertent consequences (the harms). This probability may dramatically change depending on our willingness to tolerate error in accepting false research findings. Our acceptance of research findings changes as a function of what the authors call “acceptable regret,” i.e., our tolerance of making a wrong decision in accepting the research hypothesis. They illustrate their findings by providing a new framework for early stopping rules in clinical research (i.e., when should we accept early findings from a clinical trial indicating the benefits as true?).

“Obtaining absolute ‘truth’ in research,” say Djulbegovic and Hozo, “is impossible, and so society has to decide when less-than-perfect results may become acceptable.”

Citations:

Moonesinghe R, Khoury MJ, Janssens ACJW (2007) Most published research findings are false–But a little replication goes a long way. PLoS Med 4(2): e28. (http://dx.doi.org/10.1371/journal.pmed.0040028)

Djulbegovic B, Hozo I (2007) When should potentially false research findings be considered acceptable? PLoS Med 4(2): e26. (http://dx.doi.org/10.1371/journal.pmed.0040026)

Note: This story has been adapted from a news release issued by Public Library of Science.
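Both arguments summarized in the news release above can be sketched numerically. The Python below is a minimal sketch under stated assumptions, not the authors’ code. `ppv_replicated` applies Bayes’ theorem to an Ioannidis-style setup in the spirit of the replication paper: pre-study odds `R` that a probed relationship is true, significance level `alpha`, statistical power `power`, and `n` independent studies of which at least `r` are significant, with no bias term. `acceptance_threshold` is the textbook expected-value threshold for acting on a finding with benefit `benefit` if true and harm `harm` if false; it is a simpler stand-in for, not a reproduction of, Djulbegovic and Hozo’s acceptable-regret model. All numbers are illustrative.

```python
from math import comb


def prob_at_least(r: int, n: int, p: float) -> float:
    """Probability that at least r of n independent studies are 'significant'
    when each one is significant with probability p (binomial upper tail)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(r, n + 1))


def ppv_replicated(R: float, alpha: float, power: float, n: int, r: int) -> float:
    """Posterior probability that a relationship is true, given that at least r of
    n independent studies found it significant. Assumes pre-study odds R, no bias."""
    if_true = prob_at_least(r, n, power)   # significant results when the effect is real
    if_false = prob_at_least(r, n, alpha)  # false positives when it is not
    return R * if_true / (R * if_true + if_false)


def acceptance_threshold(benefit: float, harm: float) -> float:
    """Probability of truth above which acting on a finding has positive expected
    value: accept when p * benefit > (1 - p) * harm, i.e. p > harm / (benefit + harm)."""
    return harm / (benefit + harm)


# Illustrative inputs only: odds of 1 true relationship per 10 false ones probed.
single = ppv_replicated(R=0.1, alpha=0.05, power=0.8, n=1, r=1)
replicated = ppv_replicated(R=0.1, alpha=0.05, power=0.8, n=2, r=2)
print(round(single, 3), round(replicated, 3))       # 0.615 0.962
print(acceptance_threshold(benefit=9.0, harm=1.0))  # 0.1
```

With these made-up inputs, one significant study leaves roughly a 62% chance that the finding is true, while two concordant significant studies raise it to about 96%. The threshold function shows why a low-harm decision can rationally rest on weaker evidence than a high-harm one.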

Peirce, Popper, Laudan, … Good fallibilists all. None would claim, however, that when “doing science” the ideal scientist ought not have beliefs, since this would “imply care and concern about the results.” Care and concern are scientifically appropriate when they are for things like the accuracy and representativeness of the results. And to reinforce a point made by Janet, the evaluation of the results that actually issue from experiment shouldn’t be influenced by what anybody believed would transpire prior to the actual experiment. (Yes, psychologically, expectations systematically bias perceptions. This is surely one place where a community is advantageous, but only if it is pluralistic rather than conformist.)

Popper’s falsification is a bit simplistic.

In practice you don’t throw away a very successful theory because one prediction was not observed. If you really did this we wouldn’t have any science left.

In reality you double- or triple-check the observations. Is there something wrong with the measurement? Is there a false assumption in the prediction? Can the theory be modified to incorporate the observation? Is this modification just an ad hoc excuse, or does it lead to new testable predictions? And so on.
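That checklist amounts to noting that a failed prediction refutes the whole package of premises used to derive it, not the theory alone. A minimal Python sketch, assuming (hypothetically) that the prediction follows from exactly three premises (the theory, a sound measurement, and a correct auxiliary assumption), enumerates which combinations remain logically possible after the prediction fails:

```python
from itertools import product

# Hypothetical premises jointly needed to derive the prediction.
PREMISES = ("theory", "measurement_ok", "auxiliary_ok")


def consistent_with_failed_prediction():
    """Return every truth assignment to the premises compatible with a failed prediction.

    The prediction follows from the conjunction of all premises, so observing that
    it failed rules out only the single assignment in which every premise is true."""
    return [
        dict(zip(PREMISES, values))
        for values in product([True, False], repeat=len(PREMISES))
        if not all(values)
    ]


worlds = consistent_with_failed_prediction()
print(len(worlds))                       # 7
print(any(w["theory"] for w in worlds))  # True: the theory itself may still be true
```

Logic alone cannot say which premise to give up; deciding whether to blame the instrument, revise an assumption, or abandon the theory (and whether a revision is ad hoc) is exactly where the double- and triple-checking comes in.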

I think that Bob’s right about Peirce, and I would even strengthen the point. Peirce would not only not urge the scientist to get rid of her beliefs – he would ridicule the proposition that this was either possible or desirable. Since beliefs for Peirce are fixed by repeated encounters with regularity, it’s futile to pretend that you’ve gotten rid of them. This was precisely his critique of Descartes: you can’t believe what you don’t believe, and Descartes didn’t really believe he was a brain in a vat, since he had had no experiences that would lead him to believe that (note that this is different from positing and imagining hypotheses: hypotheses are tested through induction – or, more properly for Peirce, through abduction – before they are accepted; Descartes’s ostensible belief that he was being deceived by the demon served as a premise for further reasoning).

The point for Peirce would be that the scientist should be as aware as possible of her biases. More importantly, since some beliefs and biases will slip through, she should minimize their effects by using fallibilistic methods and immersing herself in a community of inquirers.

To Chris Noble: It is not Popper’s falsification that is a bit simplistic but simplifications of Popper’s falsification which are.

(It’s probably not your fault; Kuhn and especially Feyerabend are much more to blame for such simplifications.)